July 30, 2018. Taking a spare moment to tidy up a few scripts.

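Changing the reclaim policy to Retain

If a Persistent Volume's reclaim policy is Delete, deleting its claim also deletes the underlying volume, so you can lose data. That's why the StorageClass reclaim policy is usually set to Retain. But partly because the default StorageClass uses Delete, you occasionally have to fix the reclaim policy on individual PVs. The script below finds every PV that is not set to Retain and switches them all to Retain.

Running a job like this automatically about once a day can save a lot of people from trouble.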

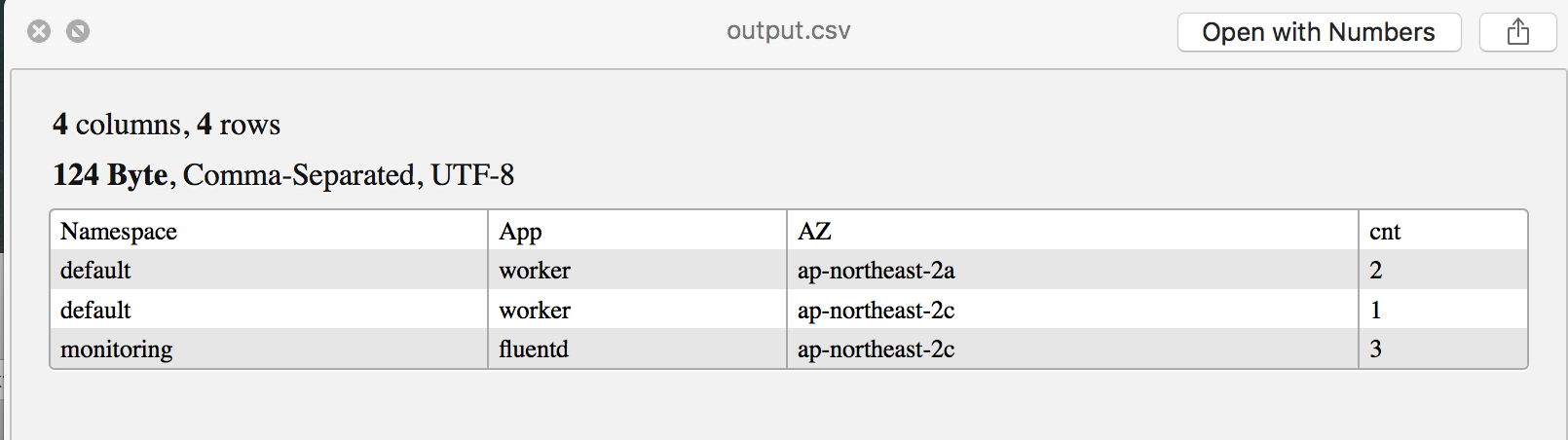
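For readers who can't make out the screenshot, here is a minimal Python sketch of the same idea. It is not necessarily the script shown above. It assumes `kubectl` is on the PATH and already pointed at the right cluster; the function names `non_retain_pvs`, `patch_command`, and `retain_all` are made up for illustration.

```python
import json
import subprocess


def non_retain_pvs(pv_list):
    """Return the names of PVs whose reclaim policy is anything other than Retain.

    pv_list is the parsed output of `kubectl get pv -o json`.
    """
    return [
        item["metadata"]["name"]
        for item in pv_list.get("items", [])
        if item.get("spec", {}).get("persistentVolumeReclaimPolicy") != "Retain"
    ]


def patch_command(pv_name):
    """Build the kubectl command that switches a single PV to Retain."""
    patch = json.dumps({"spec": {"persistentVolumeReclaimPolicy": "Retain"}})
    return ["kubectl", "patch", "pv", pv_name, "-p", patch]


def retain_all():
    """List all PVs and patch every one that is not already Retain."""
    out = subprocess.run(
        ["kubectl", "get", "pv", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    for name in non_retain_pvs(json.loads(out)):
        subprocess.run(patch_command(name), check=True)
```

Calling `retain_all()` from a daily cron job or a Kubernetes CronJob would cover the "once a day" case. Keeping the filtering separate from the `kubectl` calls makes it easy to dry-run against a saved JSON dump first.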

Viewing application distribution by AZ

Useful when you want to know whether an application's pods are spread evenly across AWS Availability Zones.

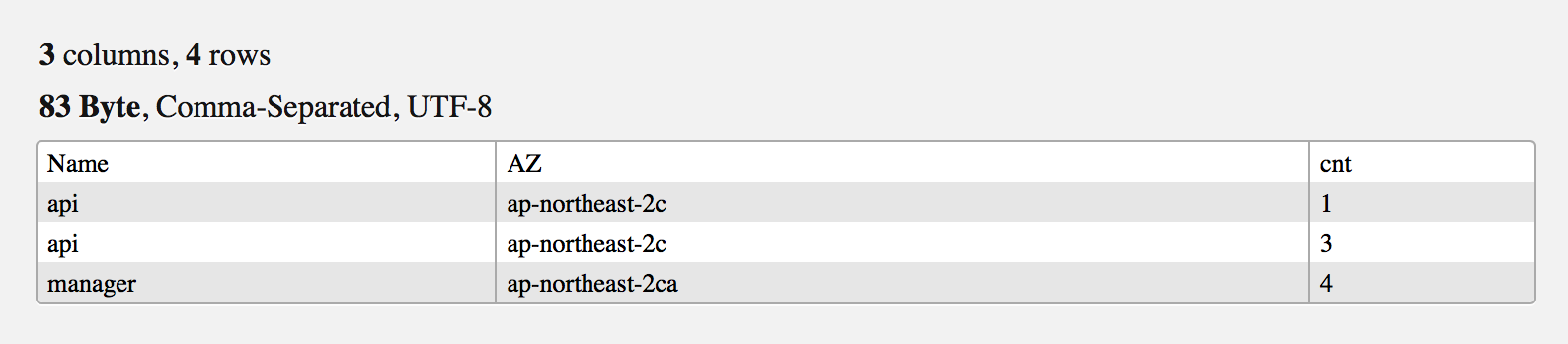
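As a rough text alternative to the screenshot, the following Python sketch counts pods per application and zone. It is an assumption-laden illustration rather than the original script: it assumes pods carry an `app` label, that nodes carry either the `topology.kubernetes.io/zone` label or the older `failure-domain.beta.kubernetes.io/zone` label, and that `kubectl` is configured. The helper names are hypothetical.

```python
import json
import subprocess
from collections import Counter

# Newer clusters use the first label; clusters from around 2018 use the second.
ZONE_LABELS = (
    "topology.kubernetes.io/zone",
    "failure-domain.beta.kubernetes.io/zone",
)


def node_zones(node_list):
    """Map node name -> zone from the parsed output of `kubectl get nodes -o json`."""
    zones = {}
    for node in node_list.get("items", []):
        labels = node["metadata"].get("labels", {})
        zones[node["metadata"]["name"]] = next(
            (labels[key] for key in ZONE_LABELS if key in labels), "unknown"
        )
    return zones


def pods_per_zone(node_list, pod_list, app_label="app"):
    """Count pods per (app, zone). The `app` label key is an assumption."""
    zones = node_zones(node_list)
    counts = Counter()
    for pod in pod_list.get("items", []):
        app = pod["metadata"].get("labels", {}).get(app_label, "unlabeled")
        node = pod.get("spec", {}).get("nodeName")
        zone = "unscheduled" if node is None else zones.get(node, "unknown")
        counts[(app, zone)] += 1
    return counts


def kubectl_json(*args):
    """Run kubectl with JSON output and return the parsed result."""
    out = subprocess.run(
        ["kubectl", *args, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


def report():
    """Print one line per (app, zone) pair with its pod count."""
    counts = pods_per_zone(
        kubectl_json("get", "nodes"),
        kubectl_json("get", "pods", "--all-namespaces"),
    )
    for (app, zone), n in sorted(counts.items()):
        print(f"{app}\t{zone}\t{n}")
```

Calling `report()` prints a tab-separated table; an application whose pods all land in one zone stands out immediately.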

Viewing EC2 instance distribution by AZ

This one isn't specific to Kubernetes, but it's useful for checking whether EC2 instances are spread evenly across multiple AZs.
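The post doesn't include a script for this one, so here is a hedged Python sketch of one possible approach. It assumes the AWS CLI is installed with credentials and a default region configured, and it counts only running instances. The function names are hypothetical.

```python
import json
import subprocess
from collections import Counter


def instances_per_zone(describe_output, state="running"):
    """Count instances per Availability Zone.

    describe_output is the parsed JSON from `aws ec2 describe-instances`.
    Only instances in the given state are counted.
    """
    counts = Counter()
    for reservation in describe_output.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            if instance.get("State", {}).get("Name") == state:
                counts[instance["Placement"]["AvailabilityZone"]] += 1
    return counts


def report():
    """Query EC2 through the AWS CLI and print instance counts per zone."""
    out = subprocess.run(
        ["aws", "ec2", "describe-instances", "--output", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    for zone, n in sorted(instances_per_zone(json.loads(out)).items()):
        print(f"{zone}\t{n}")
```

Calling `report()` prints one line per zone. To limit the count to cluster nodes, you could filter on whatever tag your cluster tooling applies to its instances before counting.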

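Author Details

If you need help with software development in general, such as Kubernetes, DevSecOps, AWS, cloud security, cloud cost management, or adopting and internalizing SaaS, feel free to ask. If we know each other, I'm happy to help informally. If you need professional help, we can work something out as long as it doesn't interfere with my day job.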
